AI Chatbots Are Now Learning from Library Books: A Complete Overview of What's Happening

- Graziano Stefanelli

- Jun 16, 2025

How Harvard’s nearly 1 million volumes could make AI models smarter and more culturally informed.

Libraries are opening their archives to help AI learn from centuries of human knowledge, not just the modern web. This project uses around 1 million scanned library books, spanning hundreds of years and over 250 languages, to give AI better and more reliable material to learn from. By training on rare manuscripts, research books, and cultural records, the project aims to build chatbots that reason more clearly, depend less on messy web data, and understand a much wider range of human knowledge.

What’s Happening?

A new effort in the technology and library worlds aims to make AI chatbots and language models smarter by changing what they learn from. Instead of relying almost entirely on content from social media, websites, and online discussions, leading technology companies and academic organizations are shifting toward large collections of real books, digitized and curated by respected libraries.

A recent milestone is Harvard Library's decision to make almost one million books available for training artificial intelligence. All of them are public-domain works, so they carry no copyright restrictions. The initiative involves OpenAI, Microsoft, and several academic research teams. Their shared goal is to train AI on material that is more reliable, better preserved, and rooted in centuries of human knowledge.

Who’s Involved?

A project of this size requires collaboration between several key institutions. Harvard Library has provided the main collection, and the Boston Public Library is also contributing, while Microsoft and OpenAI are providing financial and technical support. These organizations share the view that higher-quality input data leads to better AI performance.

Harvard’s participation is only the beginning. As more institutions contribute, the training material for AI will become broader and more reliable. The long-term goal is for AI to learn from the most respected sources, with oversight from knowledge preservation experts.

What Kind of Books Are Included?

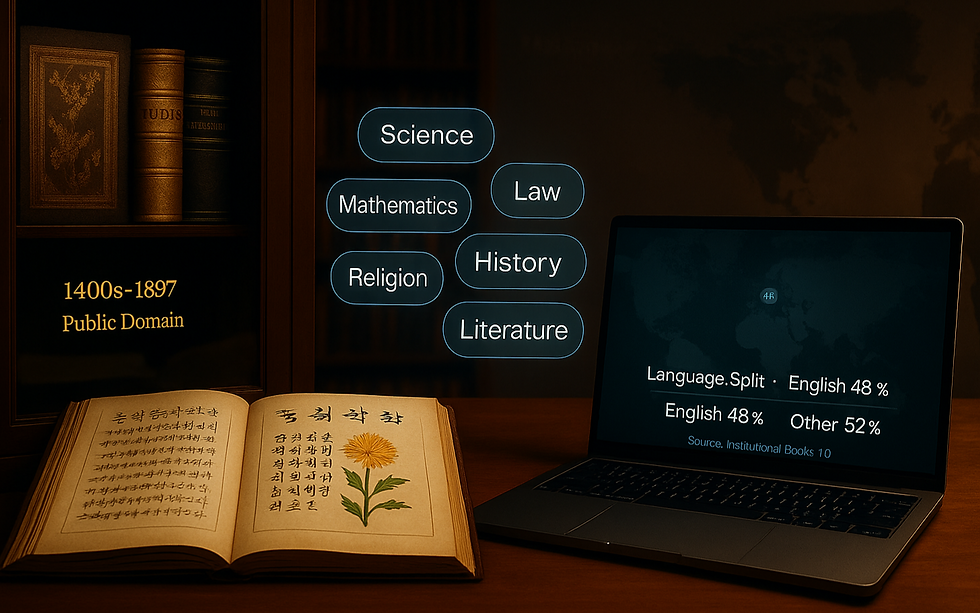

The project includes rare, historic, and academic works, some dating back to the 1400s. Topics span science, mathematics, law, philosophy, history, religion, and literature. Some items are handwritten manuscripts or culturally unique texts, such as a 15th-century Korean manuscript on flower cultivation. Crucially, the collection is not dominated by English: fewer than half of the books are in English, and the rest cover over 250 languages, including German, French, Italian, Spanish, and Latin. This linguistic spread should give AI models a more global perspective.

Let's take a deeper look at what’s inside the “Institutional Books 1.0” corpus:

Scale & time-span – 983,004 public-domain volumes (≈394 million scanned pages) dating from the 1400s through 1927. The oldest item highlighted by curators is a 15th-century Korean manuscript on flower cultivation; the biggest single slice of the set comes from the 19th century, when inexpensive printing exploded and scholarly publishing flourished.

Top subject clusters – Library metadata shows dense pockets of literature, philosophy, law, agriculture, natural science, and medicine. These categories line up with Harvard’s historic strengths (e.g., early botanical studies, Latin legal commentaries, Enlightenment philosophy tracts).

Handwritten & “niche” material – Roughly 3% of items are manuscripts rather than print, many photographed page by page. Examples include student lecture notes from 18th-century chemistry courses and vernacular prayer books in Sámi and Aymara. Such one-off texts give models exposure to spelling variation and non-standard grammar that rarely appear on the open web.

Language spread – Fewer than 50% of tokens are in English. A preliminary breakdown in the technical report lists German (~9%), French (~6%), Latin (~4%), Spanish (~3%), and Italian (~3%) as the next largest blocks, with the long tail covering 250+ other languages, from classical Greek to Swahili to 19th-century Ottoman Turkish. This diversity is intended to help future chatbots answer in, or at least recognize, far more languages than today.

Token quality over quantity – Many volumes are scholarly editions with footnotes, indices, and tables, producing long, structured sentences and dense domain vocabulary. Researchers expect this to sharpen models’ logical-reasoning skills compared with forum posts or social-media chatter.
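A percentage-of-tokens language breakdown like the one above can be computed as a token-weighted tally: each volume adds its token count to its language's total, and each total is divided by the corpus total. The sketch below shows that calculation over any iterable of records (for example, rows streamed with the Hugging Face “datasets” library). It is an illustration, not the report's own method, and the field names `language` and `token_count` are hypothetical placeholders; check the dataset card for the real column names.

```python
from collections import Counter
from typing import Iterable, Mapping


def token_share_by_language(
    records: Iterable[Mapping],
    lang_field: str = "language",      # hypothetical column name
    count_field: str = "token_count",  # hypothetical column name
) -> dict[str, float]:
    """Return each language's share of all tokens, as a percentage."""
    totals: Counter = Counter()
    for rec in records:
        totals[rec[lang_field]] += rec[count_field]
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    # most_common() orders languages from largest to smallest token total.
    return {lang: 100 * n / grand_total for lang, n in totals.most_common()}


# Toy records for illustration only, not real corpus figures.
sample = [
    {"language": "eng", "token_count": 600},
    {"language": "ger", "token_count": 250},
    {"language": "eng", "token_count": 100},
    {"language": "lat", "token_count": 50},
]
print(token_share_by_language(sample))  # → {'eng': 70.0, 'ger': 25.0, 'lat': 5.0}
```

Weighting by tokens rather than counting volumes matters here: a single long multi-volume legal commentary can outweigh dozens of short pamphlets.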

Shifting training data from recent internet text to centuries of curated print culture does two things:

(1) it gives the training data clear provenance and sidesteps copyright disputes; and

(2) it injects high-signal, multilingual, domain-rich prose that can improve factual grounding, reduce bias toward modern Anglo-centric sources, and teach models how scholars have argued, cited, and classified knowledge for hundreds of years.

••••

When Will the Library Data Be Available?

Harvard’s first public set, Institutional Books 1.0 (about 1 million public-domain volumes), went live on Hugging Face on 10 June 2025 for free, non-commercial research. Create a free Hugging Face account, click “Agree to Terms”, and you can start downloading or streaming roughly 3 TB of text (242 billion tokens) right away.
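The headline figures can be sanity-checked with quick arithmetic. Using the 983,004 volumes and ≈394 million pages from the corpus overview, plus the 242 billion tokens quoted here, the averages come out to roughly 400 pages and about 246,000 tokens per volume, or about 600 tokens per page. Since the page and token counts are themselves rounded, treat these as ballpark averages; individual books vary widely.

```python
# Figures as reported for Institutional Books 1.0.
VOLUMES = 983_004
PAGES = 394_000_000        # "≈ 394 million", a rounded figure
TOKENS = 242_000_000_000   # "242 billion", also rounded

pages_per_volume = PAGES / VOLUMES
tokens_per_volume = TOKENS / VOLUMES
tokens_per_page = TOKENS / PAGES

print(f"{pages_per_volume:,.0f} pages per volume")    # → 401 pages per volume
print(f"{tokens_per_volume:,.0f} tokens per volume")  # → 246,184 tokens per volume
print(f"{tokens_per_page:,.0f} tokens per page")      # → 614 tokens per page
```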

The Institutional Data Initiative plans to add new batches through late 2025 as partners such as the Boston Public Library finish scanning, and then to publish a more permissively licensed “2.0” release once metadata and filters are finalized. Expect periodic releases (historic newspapers, government papers, niche-language collections), each announced on the dataset card; version 2.0 is expected to open the door to most commercial training without extra paperwork.

Large models from Microsoft and OpenAI are unlikely to incorporate the full corpus before early 2026, but pilot training is reportedly already under way, and smaller teams can start experimenting today. Think of 2025 as the “early-access” phase: individual researchers fine-tune now, while enterprise systems wait for quality audits and bias checks before mass adoption.

How Can You Start Using the Dataset Right Now?

| Step | What to Do | Why It Matters |
|---|---|---|
| 1. Sign up | Open a free account at Hugging Face: https://huggingface.co/ | The dataset is gated, so access is tied to an account that has accepted the terms. |
| 2. Accept terms | On the “institutional/institutional-books-1.0” page, click “Agree to Terms” (non-commercial use, attribution, no redistribution): https://huggingface.co/datasets/institutional/institutional-books-1.0/tree/main | Grants instant read access. |
| 3. Choose a method | Browser download for small samples (Parquet shards); Git LFS or the Hugging Face CLI for multi-GB pulls, e.g. `huggingface-cli download institutional/institutional-books-1.0 --repo-type dataset --local-dir ./institutional-books` | The CLI and Git LFS avoid browser time-outs, and the CLI resumes an interrupted download. |
| 4. Mind the storage | Each shard is ~2-3 GB; the full set is ~3 TB. Use cloud buckets (AWS S3, Azure Blob) or local drives with ≥4 TB free. | Running out of space mid-download leaves corrupted or incomplete files. |
| 5. Load efficiently | In Python, after logging in (e.g. `huggingface-cli login`): `from datasets import load_dataset`, then `ds = load_dataset("institutional/institutional-books-1.0", split="train", streaming=True)` | Streaming mode reads records on demand, so you don’t need to hold everything on disk, which is handy on limited hardware. |
| 6. Respect the rules | Keep any shared outputs highly transformed (e.g., analysis charts, embeddings) and always cite: Institutional Books, Institutional Data Initiative, Harvard Library. | Stays within the early-access license and builds community trust. |

Once you’re ready to access the dataset, first decide whether you want a small sample or the whole thing. For a small sample, simply download a file directly from the Hugging Face website; this is fast and works well for quick tests. If you need the full dataset, use the Hugging Face CLI (a command-line tool) or Git LFS, both of which handle very large downloads better.

You can find instructions for these tools on the dataset’s Hugging Face page.

Make sure you have enough storage space. If your computer doesn’t have at least 4 TB free, consider using an external drive or cloud storage such as AWS S3 or Azure Blob Storage.
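Before starting a multi-terabyte pull, it is worth checking free space programmatically. Here is a minimal sketch using only Python’s standard library (`shutil.disk_usage`); the 4 TB default mirrors the ≥4 TB suggestion in the steps above and is a rule of thumb, not an official requirement. The function name `has_free_space` is our own.

```python
import shutil

TB = 10**12  # decimal terabyte, as disk vendors count it


def has_free_space(path: str, required_bytes: int = 4 * TB) -> bool:
    """True if the filesystem holding `path` has at least `required_bytes` free."""
    return shutil.disk_usage(path).free >= required_bytes


if __name__ == "__main__":
    free_tb = shutil.disk_usage(".").free / TB
    print(f"Free space here: {free_tb:.2f} TB")
    if not has_free_space("."):
        print("Less than 4 TB free: consider an external drive or a cloud bucket.")
```

Point `path` at the directory you plan to download into, since an external drive or mounted bucket is a different filesystem from your working directory.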

If you’re working in Python, use the Hugging Face “datasets” library. Its streaming mode loads data as needed instead of filling up your drive all at once. For example, you can write a script that reads the books one at a time and analyzes them, or keeps only a particular language or subject.
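The streaming pattern described above can be sketched as a small lazy filter that walks records one at a time, keeps those matching a language, and stops after a fixed number. It works on any iterable of dicts, including the object returned by `load_dataset(..., streaming=True)`. The function name, the `language` field name, and the language code `"lat"` are illustrative assumptions; the real column names and codes are listed on the dataset card.

```python
from itertools import islice
from typing import Iterable, Iterator, Mapping, Optional


def books_in_language(
    records: Iterable[Mapping],
    language: str,
    lang_field: str = "language",  # hypothetical column name; check the dataset card
    limit: Optional[int] = None,
) -> Iterator[Mapping]:
    """Lazily return records whose `lang_field` equals `language`.

    Records are pulled one at a time, so with a streaming dataset
    the whole corpus never has to be held in memory or on disk.
    """
    matches = (r for r in records if r.get(lang_field) == language)
    # islice with limit=None means "no cap"; otherwise stop after `limit` matches.
    return islice(matches, limit)
```

With a streaming dataset `ds` loaded as in step 5, `books_in_language(ds, "lat", limit=5)` would yield up to five matching records, assuming a `language` column holding codes like `"lat"`. The “datasets” library’s own `IterableDataset.filter()` and `IterableDataset.take()` can achieve the same thing natively.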

Throughout the process, keep track of how you use the data, cite Harvard Library and the Institutional Data Initiative in your work, and do not redistribute the full dataset. If you publish results, share only summaries, analyses, or models, never the original book files.
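As one illustration of sharing an analysis rather than the text itself, the sketch below reduces a book’s text to aggregate statistics (word count, distinct words, most frequent words) and attaches the citation named in the steps above. The function `summarize_text` is hypothetical and its tokenization is deliberately naive; whether a particular output is transformed enough is a question for the dataset’s terms, not for this code.

```python
import re
from collections import Counter

CITATION = "Institutional Books, Institutional Data Initiative, Harvard Library"


def summarize_text(text: str, top_n: int = 5) -> dict:
    """Reduce raw text to aggregate statistics that contain no running prose."""
    # Naive tokenization: lowercase runs of Unicode letters (digits and _ excluded).
    words = re.findall(r"[^\W\d_]+", text.lower())
    counts = Counter(words)
    return {
        "word_count": len(words),
        "distinct_words": len(counts),
        "top_words": counts.most_common(top_n),
        "source": CITATION,
    }


print(summarize_text("The law of the land is the law.", top_n=2))
```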

If you run into any issues, Hugging Face’s documentation and discussion forums can help troubleshoot download or setup problems.

_________

Why Use These Books for AI?

Data Quality and Reliability. Library books are curated by professionals. They come with known authors, dates, and trusted publishing origins, unlike random internet text.

Diversity and Depth. The variety of languages, topics, and historical contexts introduces AI to global cultures and intellectual traditions that are underrepresented in typical online data.

Logical and Structured Content. Older texts often follow structured reasoning and step-by-step analysis, which may help AI models reason more rigorously.

Clear Data Provenance. These books offer transparent, traceable sources, reducing legal risks and improving data ethics and accountability.

What Are the Challenges and Concerns?

Problematic or Outdated Content. Some books include racist, sexist, or otherwise biased viewpoints. Teams are working on flagging and filtering such content appropriately.

Resource Requirements. Scanning and digitizing old volumes is expensive and labor-intensive. Not all libraries can manage this without external funding and support.

Balancing Access and Oversight. While the material is being opened up, libraries want to maintain control over how it’s used, ensuring that historical materials are treated responsibly.

How Will These Books Be Used and Shared?

The digitized collections are being uploaded to Hugging Face, a platform widely used by AI researchers and developers. Publishing the data there ensures broad access, including for open-source communities, while participating libraries retain oversight and influence over how their contributions are used, with the aim of ensuring ethical and culturally respectful use.

Why Does This Matter for the Future of AI?

Until now, most AI systems have been trained on internet content, much of it unverified or shallow. This project introduces a higher standard of training material, one that is more reliable, diverse, and historically grounded. However, this approach also raises important questions: how to prevent the spread of harmful historical ideas, and how to ensure AI properly understands context, nuance, and cultural meaning. Solving these problems will be central to building more intelligent, responsible AI systems.

__________


DATA STUDIOS